Climate change is one of the most pressing challenges of our time. From extreme weather events to impacts on human health and community wellbeing, the consequences of our changing climate are increasingly severe. At the same time, a major technological shift is unfolding: the rise of generative AI. The two are closely intertwined. In this blog post, we explore the complex relationship between AI and climate change, highlighting both the technology’s enormous environmental impacts and the opportunities it presents for climate research.

AI Is Power Hungry

Did you know that making one image with generative AI can use nearly as much energy as fully charging your smartphone? Every time you use AI, especially generative AI, the energy costs can be staggering. Measuring how much power it actually takes to run AI has been difficult due to a lack of corporate transparency, but researchers at Hugging Face and Carnegie Mellon recently released a study quantifying the energy required to run various machine learning models and architectures. The least efficient image generation model they tested used 11.49 Wh of energy per image, or nearly one full smartphone charge.
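
For readers who want to check the "nearly one charge" comparison, here is a rough back-of-the-envelope sketch. The 11.49 Wh figure comes from the study above. The 12 Wh size of a full smartphone charge is an assumption made here for illustration (real phones vary), and the function and constant names are made up for this example.

```python
# Energy used per image by the least efficient image generation model (from the study).
ENERGY_PER_IMAGE_WH = 11.49

# ASSUMPTION: one full smartphone charge is roughly 12 Wh. Real phones vary.
SMARTPHONE_CHARGE_WH = 12.0


def charges_equivalent(energy_wh: float, charge_wh: float = SMARTPHONE_CHARGE_WH) -> float:
    """Return how many full smartphone charges a given amount of energy equals."""
    if charge_wh <= 0:
        raise ValueError("charge_wh must be positive")
    return energy_wh / charge_wh


fraction = charges_equivalent(ENERGY_PER_IMAGE_WH)
print(f"One generated image is about {fraction:.0%} of a full smartphone charge")
```

Under that assumed charge size, a single image lands just under one full charge, which is where "nearly" comes from. A different assumed battery size would shift the ratio.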

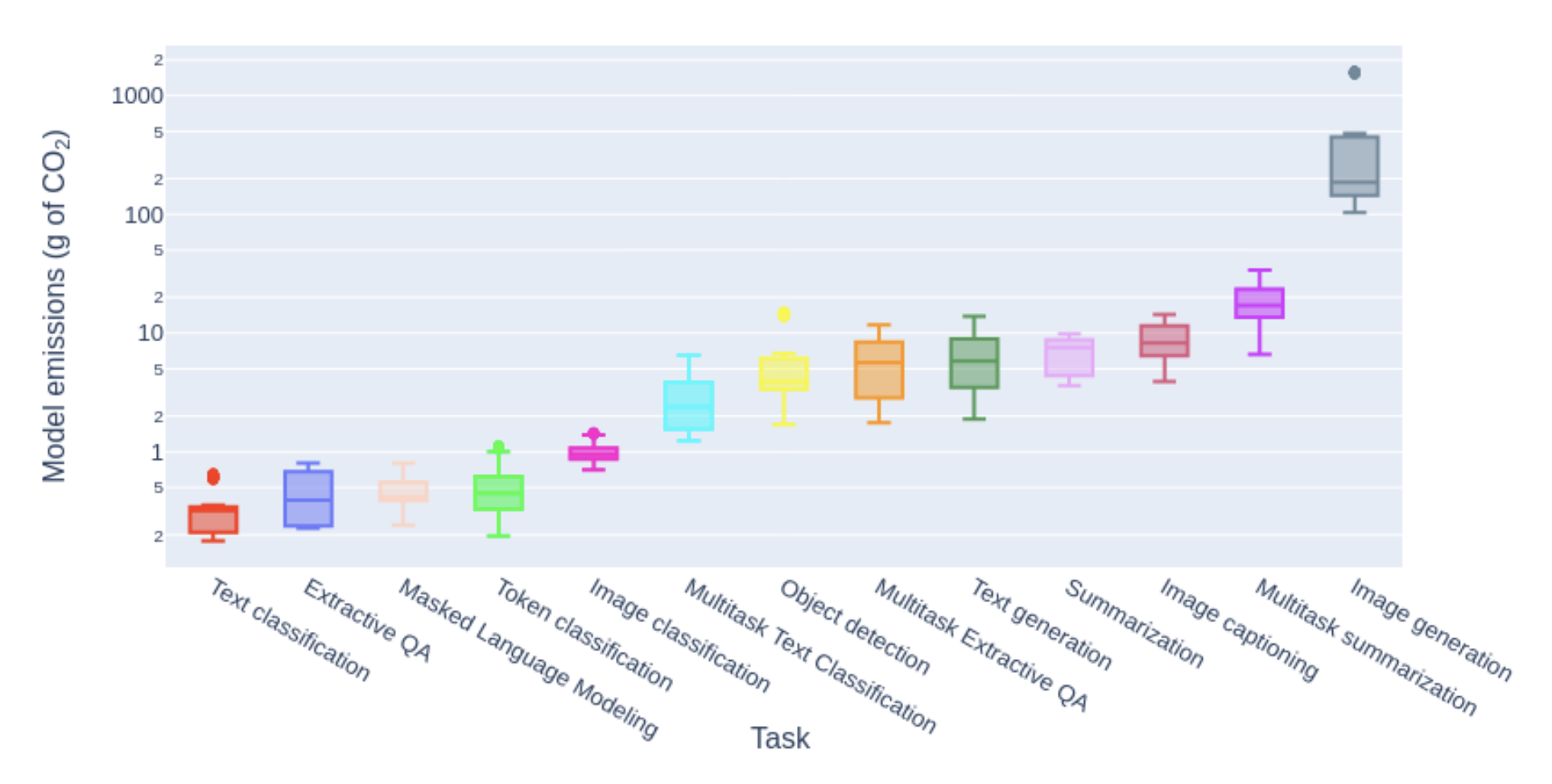

While the exact amount of carbon emitted varies greatly depending on the task, model type, and architecture, the researchers present a few key findings. The modality of the task (the type of data it takes as input and produces as output) tends to affect its carbon intensity: for instance, tasks involving images are more carbon-intensive than those involving text alone (Figure 1).

Training generative models (think of this as the setup cost for ML models) remains orders of magnitude more energy- and carbon-intensive than a single inference (think of this as the day-to-day use of ML models), but inference happens far more frequently than training: as many as billions of times a day for products like Google or ChatGPT. That quickly adds up to a huge hidden environmental toll.
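
To make the "setup cost versus day-to-day use" reasoning concrete, here is a minimal sketch of the break-even arithmetic. Every number in it is a hypothetical placeholder picked to keep the math easy to follow, not a measurement of any real model, and `breakeven_inferences` is a name invented for this example.

```python
def breakeven_inferences(training_energy_wh: float, energy_per_inference_wh: float) -> float:
    """Number of inferences whose combined energy equals the one-time training energy."""
    if energy_per_inference_wh <= 0:
        raise ValueError("energy_per_inference_wh must be positive")
    return training_energy_wh / energy_per_inference_wh


# HYPOTHETICAL placeholder values, not measurements of any real model.
TRAINING_ENERGY_WH = 1_000_000_000   # one-time "setup cost"
ENERGY_PER_INFERENCE_WH = 1.0        # cost of a single use
INFERENCES_PER_DAY = 1_000_000_000   # "billions of times a day" scale

uses_to_match_training = breakeven_inferences(TRAINING_ENERGY_WH, ENERGY_PER_INFERENCE_WH)
days_to_match_training = uses_to_match_training / INFERENCES_PER_DAY

print(f"Uses needed to match training energy: {uses_to_match_training:,.0f}")
print(f"Days at the assumed usage rate: {days_to_match_training:.1f}")
```

The point is the shape of the calculation rather than the result: however large the one-time training cost, a high enough daily usage rate will eventually overtake it.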

The study also offers a hopeful recommendation. General-purpose generative models are much more energy-intensive than task-specific models performing the same task, so choosing a smaller, task-specific model where one will do can cut energy use, and it may bring other practical benefits as well.

AI Is Thirsty

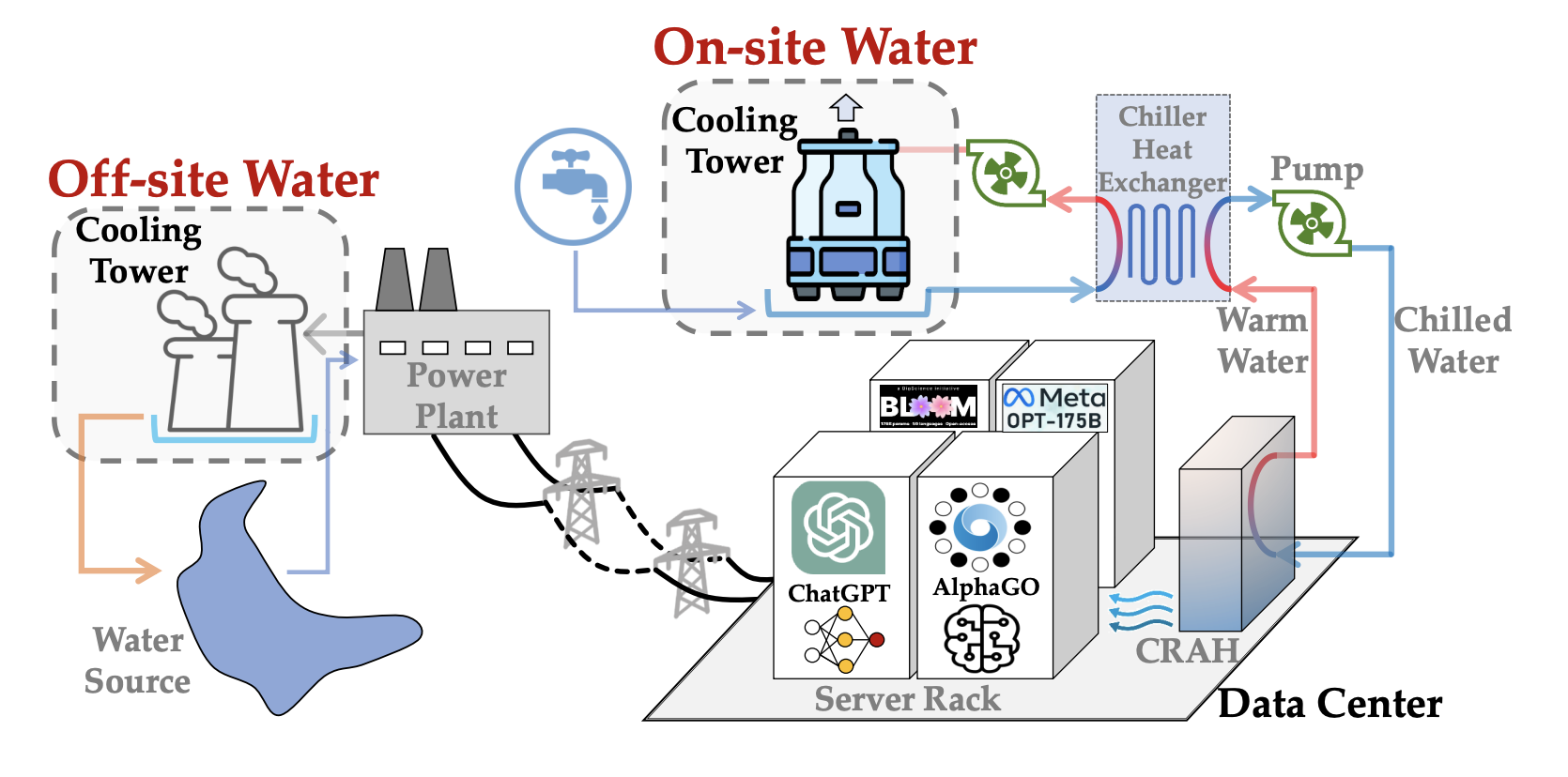

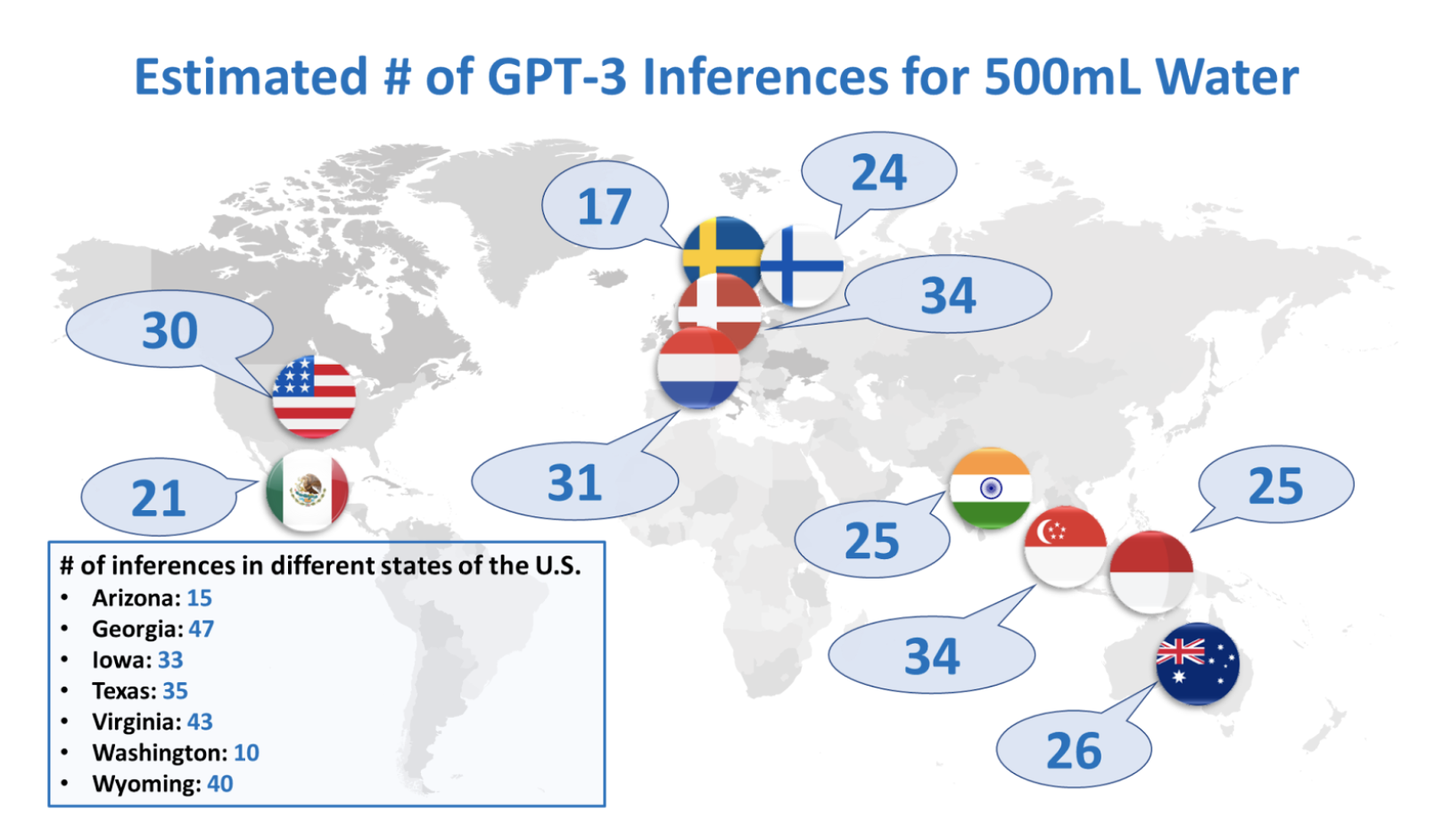

High water usage is another concerning aspect of AI’s environmental impact, and researchers from UC Riverside recently explained why. Large models are often trained and deployed on large clusters of servers with multiple graphics processing units (GPUs). To cool these energy-intensive systems, data centers commonly use cooling towers, which use large amounts of clean, fresh water. In fact, the researchers found that training a large language model like GPT-3 can consume millions of liters of fresh water, and running 10 to 50 inference queries on GPT-3 consumes about 500 milliliters of water, depending on how and where the model is hosted. GPT-4, the most recent of OpenAI’s offerings, presumably has a much larger model size and thus likely uses even more water.
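
As a quick sanity check on what that means per query, here is a small sketch that turns the "500 milliliters per 10 to 50 queries" figure into a per-query range. The one-million-query example is purely illustrative, and the function names are made up for this post.

```python
WATER_ML = 500.0                     # about 500 mL of water (figure from the researchers)
QUERIES_LOW, QUERIES_HIGH = 10, 50   # number of queries that amount of water covers


def water_per_query_ml(total_ml: float, queries: int) -> float:
    """Average water use per query, in milliliters."""
    if queries <= 0:
        raise ValueError("queries must be positive")
    return total_ml / queries


def liters_for(queries: int, per_query_ml: float) -> float:
    """Total water for a number of queries, in liters."""
    return queries * per_query_ml / 1000.0


low_ml = water_per_query_ml(WATER_ML, QUERIES_HIGH)   # best case: 50 queries share 500 mL
high_ml = water_per_query_ml(WATER_ML, QUERIES_LOW)   # worst case: 10 queries share 500 mL
print(f"Per query: {low_ml:.0f} to {high_ml:.0f} mL")

# Illustrative scale-up only: one million queries.
n = 1_000_000
print(f"{n:,} queries: {liters_for(n, low_ml):,.0f} to {liters_for(n, high_ml):,.0f} liters")
```

The spread between the low and high ends reflects the caveat above: the real figure depends on how and where the model is hosted.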

The scale of AI’s water use is staggering. The researchers estimate that global AI demand may account for 4.2 to 6.6 billion cubic meters of water withdrawal in 2027, which is more than the total annual water withdrawal of four to six Denmarks.

This is particularly concerning given that clean, fresh water is already a scarce resource: 1.1 billion people worldwide lack access to water, and a total of 2.7 billion find water scarce for at least one month of the year. At a Microsoft data center in the Arizona desert, journalist Karen Hao explored the stark contrast between AI advancement and conservation. Phoenix had just had its hottest summer ever, with 55 days of temperatures above 110 degrees Fahrenheit. Just 20 miles away, in the suburb of Goodyear, Microsoft’s data center complex is projected to use about 56 million gallons of water each year, equivalent to the amount used by 670 Goodyear families. And while the largest tech firms have pledged to become carbon-negative and water-positive, their planned efforts don’t directly support the communities they affect.
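
To put the Goodyear numbers in more familiar terms, the sketch below works out what the comparison implies per family and converts the annual total to liters. It uses only the two figures quoted above plus the standard US gallon-to-liter conversion. The per-day figure assumes a 365-day year, and the variable names are made up for this example.

```python
LITERS_PER_US_GALLON = 3.785411784  # standard definition of the US liquid gallon

ANNUAL_GALLONS = 56_000_000  # projected yearly use of the data center complex
FAMILIES = 670               # number of Goodyear families using the same amount

gallons_per_family_year = ANNUAL_GALLONS / FAMILIES
gallons_per_family_day = gallons_per_family_year / 365  # assumes a 365-day year
annual_liters = ANNUAL_GALLONS * LITERS_PER_US_GALLON

print(f"Implied use per family: {gallons_per_family_year:,.0f} gallons per year")
print(f"Implied use per family: {gallons_per_family_day:,.0f} gallons per day")
print(f"Data center total: about {annual_liters / 1e6:,.0f} million liters per year")
```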

The impact of AI on water consumption is already evident in the operations of major tech companies. For example, the researchers report that Google’s onsite water consumption in 2022 increased by 20% compared to 2021, and Microsoft saw a 34% increase over the same period.

The Potential of AI in Climate Research

Alongside these environmental costs, organizations and corporations tout the contributions AI can make to climate research and mitigation efforts. From tracking melting ice caps to mapping deforestation, many initiatives have harnessed AI to aid the environment. Organizations like Climate Change AI and AI for the Planet are at the forefront of this work. AI for climate has also attracted global attention: at the 2023 UN Climate Change Conference (COP28), much of the programming focused on leveraging artificial intelligence while acknowledging the technology’s intense energy and natural resource needs. Given how pressing an issue climate change is, there is no doubt that AI is a powerful and necessary tool.

The Big Problem: No Transparency

Several changes would help. First, there’s a pressing need for more corporate transparency about AI’s energy and water consumption. Currently, it’s extremely difficult to calculate accurate, up-to-date estimates of energy or water usage. Researchers like Sasha Luccioni suggest introducing a type of “energy star rating” for AI models, allowing consumers to compare energy efficiency the same way they might for appliances. This transparency would enable more informed decisions about AI use and development.

Second, prioritizing efficiency in AI model design and deployment is crucial. Using smaller, task-specific models when possible can significantly reduce energy consumption compared to large, multi-purpose models.

Third, investing in sustainable infrastructure for AI is essential. This includes improving the energy and water efficiency of data centers, exploring alternative cooling methods, and increasing the use of renewable energy sources to power AI systems.

The relationship between AI and the environment is complex and multifaceted. While AI presents significant challenges in terms of energy and water consumption, it also offers powerful tools for climate research and mitigation. As we navigate this technological frontier, it’s important that we remain mindful of AI’s environmental implications and strive to develop and deploy AI systems in a way that supports, rather than hinders, our efforts to combat climate change.