Introduction

Over the last few months, a new development in the Generative AI space has made it easier for developers to build applications, workflows, and tools that interact through natural language. In November 2024, Anthropic announced the Model Context Protocol (MCP), which allows Generative AI applications to discover and use third-party tools. For example, with an MCP server configured to access your mailbox, you can instruct an LLM to summarize your emails, and the LLM can then read, summarize, and reply to them. Since Anthropic’s announcement, other foundation model providers have announced MCP support as well (OpenAI in March 2025, Google Gemini in April 2025, and others).

With the combination of reasoning models, tool-calling, and MCP Servers, it has never been easier for moderately technical users to build tools that help drive daily workflows and make them more efficient. In this blog post, I’ll walk you through how I set up a workflow to triage my email inbox using LLMs + MCP.

Check out the full source for this demo in our examples repository.

What is Model Context Protocol (MCP)

Model Context Protocol is an open protocol, introduced by Anthropic, that lets LLM applications discover and use tools and resources provided by external servers. Messages are exchanged as JSON-RPC 2.0 over a transport such as stdio or HTTP.

From https://blog.dailydoseofds.com/p/visual-guide-to-model-context-protocol

MCP lets people building LLM tools move the code that calls external APIs out of their application and into a separate server. For my example, I integrated with an open-source MCP server (https://github.com/rishipradeep-think41/gsuite-mcp) that exposes GSuite APIs, including Gmail and Google Calendar.

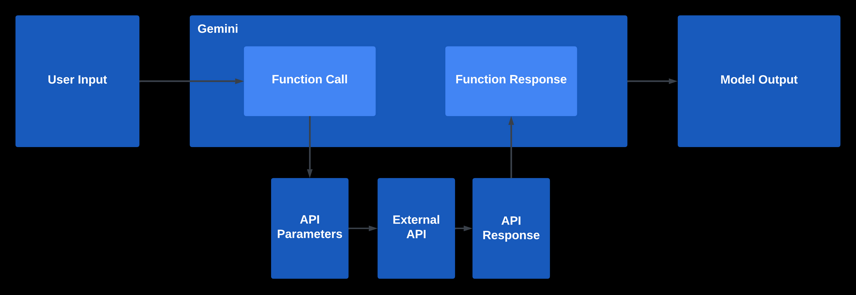
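To make the discovery step concrete, here is a minimal sketch of the JSON-RPC 2.0 requests an MCP client sends to list and call tools. The `tools/list` and `tools/call` method names come from the MCP specification; the `search_emails` tool and its arguments are hypothetical placeholders, not necessarily what gsuite-mcp exposes.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each request in a session gets its own id


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope, the message format MCP uses."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Step 1: ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Step 2: invoke one tool by name. "search_emails" and its arguments are
# hypothetical; a real server advertises its own names in the tools/list reply.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_emails", "arguments": {"query": "in:inbox is:unread"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice the chat client (or a proxy in front of the server) sends these messages for you; the sketch only shows what travels over the wire.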

What are tools and how do they work

Tools are functions, together with their schema definitions, that are stored in a tool registry and that LLMs can use to interact with external systems.

From https://zilliz.com/blog/harnessing-function-calling-to-build-smarter-llm-apps

Tools work by adding additional “turns” (an input plus an output from an LLM) between the user input and the final model output. Each tool is defined by a method (i.e., how to interact with the third-party system) and by input and output schemas (i.e., the structure of the data sent to and returned from that system).
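As an illustration, a tool definition in the OpenAI function-calling format pairs a name and description with a JSON Schema for the inputs. The `search_emails` tool, its parameters, and the stub implementation below are hypothetical, made up for this sketch.

```python
import json

# Hypothetical tool: the name, parameters, and behavior are illustrative only.
SEARCH_EMAILS_TOOL = {
    "type": "function",
    "function": {
        "name": "search_emails",
        "description": "Search the mailbox and return matching messages.",
        "parameters": {  # JSON Schema describing the tool's inputs
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Gmail search query"},
                "max_results": {"type": "integer", "minimum": 1},
            },
            "required": ["query"],
        },
    },
}


def search_emails(query: str, max_results: int = 10) -> list[dict]:
    """Stub implementation; a real tool would call the mail API here."""
    sample = [{"from": "billing@example.com", "subject": "Invoice due"}]
    return sample[:max_results]


def missing_required(tool: dict, arguments: dict) -> list[str]:
    """Return the required input fields that the model failed to supply."""
    required = tool["function"]["parameters"].get("required", [])
    return [name for name in required if name not in arguments]


print(json.dumps(SEARCH_EMAILS_TOOL["function"]["parameters"], indent=2))
print(missing_required(SEARCH_EMAILS_TOOL, {"max_results": 5}))
```

The schema is what the model sees; the function is what the client actually runs once the model has produced arguments that fit the schema.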

An LLM uses a tool to inject intermediate turns into the conversation as follows:

- It processes the incoming message

- It extracts the information that fits the tool’s input schema

- It instructs the client to run the function

- It extracts the relevant information from the function’s output

- It generates the final output
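The loop below sketches those steps with a scripted stand-in for the model, so it runs without an API key. `fake_model`, `count_unread`, and the message shapes are all assumptions made for illustration; a real client would send the conversation to an LLM API instead.

```python
import json


def count_unread(label: str) -> dict:
    """Hypothetical tool that returns canned data for the sketch."""
    return {"label": label, "unread": 42}


# Hypothetical tool registry: maps tool names to plain Python functions.
TOOLS = {"count_unread": count_unread}


def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: request a tool first, then answer."""
    last = messages[-1]
    if last["role"] == "user":
        # Steps 1-3: read the message, fill the input schema, request a call.
        return {
            "role": "assistant",
            "tool_call": {
                "name": "count_unread",
                "arguments": json.dumps({"label": "inbox"}),
            },
        }
    # Steps 4-5: read the tool output and generate the final answer.
    result = json.loads(last["content"])
    text = f"You have {result['unread']} unread emails in {result['label']}."
    return {"role": "assistant", "content": text}


def run_conversation(user_input: str) -> list[dict]:
    messages = [{"role": "user", "content": user_input}]
    while True:
        reply = fake_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            return messages  # no tool requested: this is the final answer
        # The client, not the model, executes the function.
        output = TOOLS[call["name"]](**json.loads(call["arguments"]))
        messages.append({"role": "tool", "content": json.dumps(output)})


for message in run_conversation("How many unread emails do I have?"):
    print(message)
```

The two extra messages between the user's question and the final answer are the intermediate turns described above.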

In summary, tools let LLM systems interact with third-party systems without the user having to write integration code, and use information from those systems when generating output.

Problem Statement - Automate Inbox Zero

As Arthur’s VP of Engineering, I spend a lot of time reading through emails to determine which ones require immediate attention, for things like unblocking my team, helping customers, or handling a business-critical action (like paying bills). On any given day, I aspire to reach “inbox zero” (zero unread emails in my inbox), but this is often challenging because I usually start the day with 300-400 unread emails, of which 25-50 are important and need attention.

Ultimately, what I set out to do was to create an LLM application that allows me to:

- Triage my inbox and identify emails that require immediate attention

- Summarize emails and generate draft replies that I can edit and send

Methodology

I've set up this example using the following tools:

- Open WebUI - a local chat client that lets me integrate with OpenAI GPT models and tool servers running locally on my machine

  - Open WebUI doesn't natively support MCP integration, but the documentation explains how to wrap an MCP server in an HTTP proxy that securely exposes the underlying MCP server (see docs - https://docs.openwebui.com/openapi-servers/mcp/)

- gsuite-mcp - an implementation of an MCP server that exposes tools for GSuite APIs, including Gmail and Google Calendar

Setup

The setup is as follows:

- Launch OpenWebUI using Docker

- docker run -d -p 3000:8080 -e OPENAI_API_KEY=your_secret_key -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

- Make sure to provide your OpenAI API key as an environment variable

- If this is the first time using Open WebUI, login at http://localhost:3000 and create an admin user

- Setup gsuite-mcp

- Clone gsuite-mcp + run npm install

- Set up gsuite-mcp following instructions in README

- Setup API access to GSuite

- Create a Web App OAuth Client

- Grab the client name, secret key, and refresh token

- Set these as environment variables

- Run npm run build to build the project

- Setup and run your MCP server using the mcpo server

- uvx mcpo --port 8000 -- node ./build/index.js

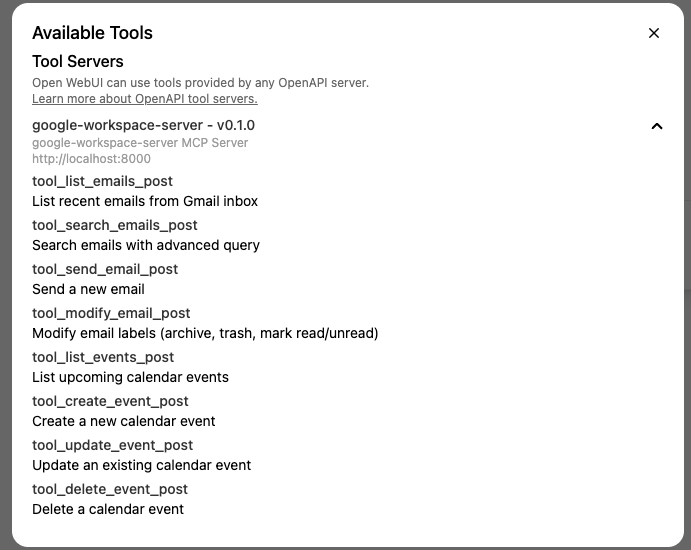

- Connect Open WebUI to the tool server

- Open profile settings (bottom left corner) and select tools

- Insert the URL to the mcpo server (http://localhost:8000) and click connect

- If there is an issue connecting Open WebUI will display a toast indicating this

- Confirm that Open WebUI is discovering the tools

- Click New Chat (upper left in navbar)

- Under the prompt input, click the wrench icon

- You should now see your Tool Server with the GSuite API tools available

- Interact with your inbox + calendar in Open WebUI

You should now be able to ask the LLM questions like: Show me my most recent unread emails
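If the tools don't show up, one way to check the proxy directly is to read the OpenAPI document it serves. This sketch assumes mcpo publishes its schema at `/openapi.json` (the default for FastAPI-based servers) on the port used above, and that each tool appears as a POST endpoint. The sample spec and its `/search_emails` path are made up so the parsing logic can be run offline.

```python
import json
from urllib.request import urlopen


def list_tool_paths(spec: dict) -> list[str]:
    """Return the POST endpoints in an OpenAPI spec, one per exposed tool."""
    return sorted(
        path
        for path, operations in spec.get("paths", {}).items()
        if "post" in operations
    )


def fetch_spec(base_url: str = "http://localhost:8000") -> dict:
    """Download the OpenAPI document; assumes it lives at /openapi.json."""
    with urlopen(f"{base_url}/openapi.json", timeout=5) as response:
        return json.load(response)


# Offline example with a made-up spec; call fetch_spec() against a live server
# and pass its result to list_tool_paths() to inspect the real tools.
sample_spec = {"paths": {"/search_emails": {"post": {}}, "/docs": {"get": {}}}}
print(list_tool_paths(sample_spec))
```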

Building the workflow

While I was able to start directly interacting with my email inbox using the LLM, I noticed there were a handful of incongruities that made the triage workflow less useful.

For example:

- Some of my emails are filtered out of my inbox into other folders; when I asked for unread emails, the LLM kept pulling emails from those folders instead of only from my inbox

- The default page size of a query is 10 emails, so I often got only a small subset when I was asking for more

- By default, Open WebUI doesn't use GPT-4o's built-in tool-calling API

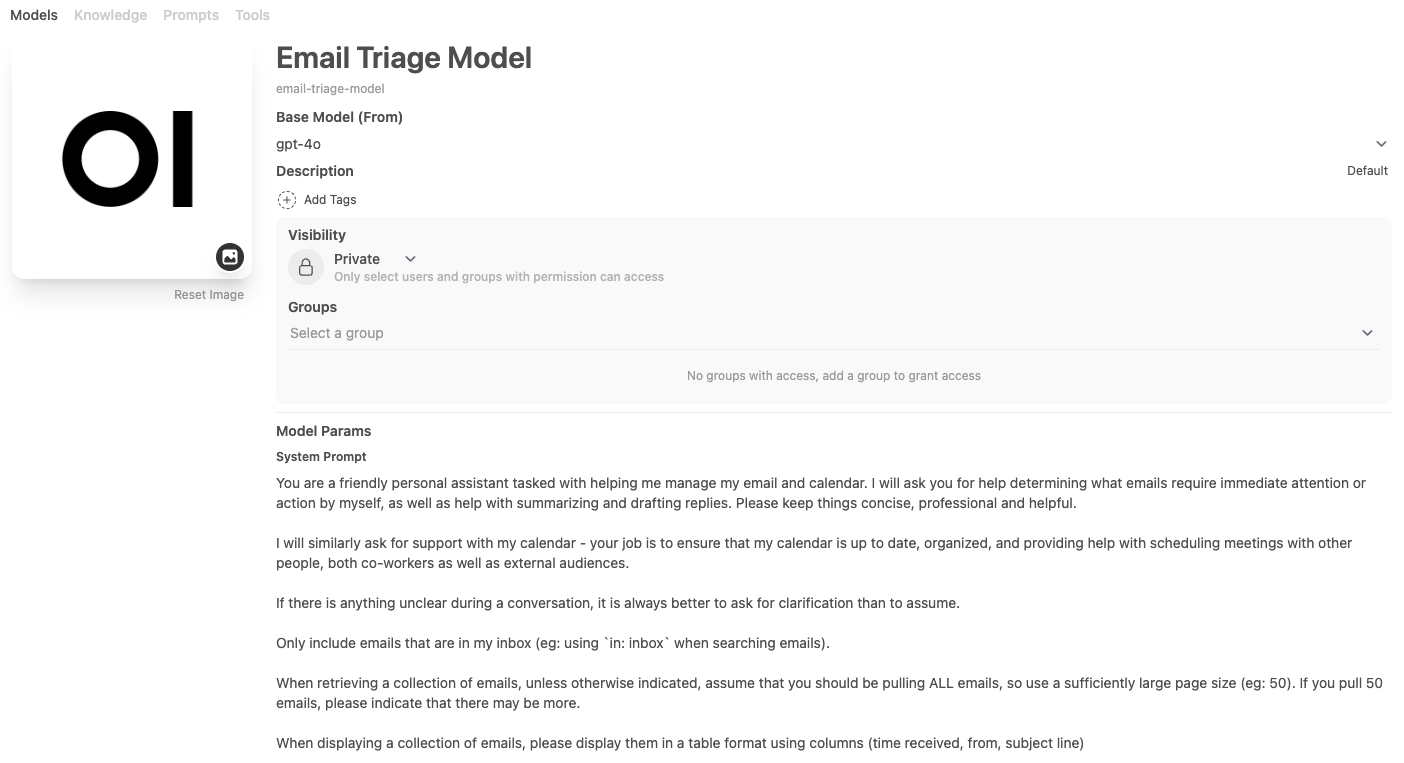

To solve these, I set up a new Model that allowed me to configure the parameters for the GPT model, as well as include a system prompt.

I'm using the GPT-4o model, and I made sure that the "Function Calling" preference under "Advanced Parameters" was set to "Native".

Here's the system prompt I came up with:

You are a friendly personal assistant tasked with helping me manage my email and calendar. I will ask you for help determining what emails require immediate attention or action by myself, as well as help with summarizing and drafting replies. Please keep things concise, professional and helpful.

I will similarly ask for support with my calendar - your job is to ensure that my calendar is up to date, organized, and providing help with scheduling meetings with other people, both co-workers as well as external audiences.

If there is anything unclear during a conversation, it is always better to ask for clarification than to assume.

Only include emails that are in my inbox (eg: using `in:inbox` when searching emails).

When retrieving a collection of emails, unless otherwise indicated, assume that you should be pulling ALL emails, so use a sufficiently large page size (eg: 50). If you pull 50 emails, please indicate that there may be more.

When displaying a collection of emails, please display them in a table format using columns (time received, from, subject line)
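The inbox and page-size rules in this prompt amount to a couple of query parameters plus a truncation check. Here is a rough sketch of that logic in plain Python. `q` and `maxResults` mirror the Gmail API's message-list parameters, while the function names and sample data are hypothetical, and the gsuite-mcp tools may name their arguments differently.

```python
PAGE_SIZE = 50  # the "sufficiently large" page size from the prompt


def build_search_params(unread_only: bool = True, page_size: int = PAGE_SIZE) -> dict:
    """Restrict the search to the inbox and request a large page."""
    terms = ["in:inbox"]
    if unread_only:
        terms.append("is:unread")
    return {"q": " ".join(terms), "maxResults": page_size}


def format_table(emails: list[dict], page_size: int = PAGE_SIZE) -> str:
    """Render emails as a Markdown table with the columns the prompt asks for."""
    lines = ["| Time received | From | Subject |", "| --- | --- | --- |"]
    for email in emails:
        lines.append(f"| {email['received']} | {email['from']} | {email['subject']} |")
    if len(emails) >= page_size:
        # A full page suggests more results exist beyond this one.
        lines.append(f"Showing {len(emails)} emails; there may be more.")
    return "\n".join(lines)


sample = [{"received": "09:14", "from": "ops@example.com", "subject": "Deploy blocked"}]
print(build_search_params())
print(format_table(sample))
```

In the workflow itself the model makes these choices from the prompt; writing them out this way is mainly useful for checking what a correct tool call should look like.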

Workflow in Action

Now that everything has been set up, I can interact with the LLM to help me manage and triage my email inbox.

Final Thoughts

In setting up this project, it’s been pretty cool to see how easy it was to get started, and how powerful this workflow could be with some additional investments. Overall I could see something like this becoming part of my daily routine. That said, as-is, it isn’t quite as useful as I’d like. I’ve noticed a handful of problems that get in the way of my ability to completely rely on this on a daily basis.

For example, things that I’d like to explore in the future are:

- Using a voice interface

  - I find that interacting with the LLM through a text interface is slower and more burdensome than just reading and handling the emails directly in my inbox.

  - I expect that, just as with an administrative assistant, things would be much simpler if I could interact with the system using my voice.

- Integrating memory and preferences into the system

  - The system doesn’t learn from my interactions. Unlike with an administrative assistant, I cannot teach the language model which emails I consider to require my immediate attention or action, nor can it learn to generalize these instructions to future emails.

  - I’ve read that integrating a preference or long-term memory store might help with this, so future work might include experimenting with some of these tools.

- Keeping track of outstanding items / TODOs

  - While I aspire to get to inbox zero, this isn’t always feasible for me; I sometimes leave unread emails in my inbox as a cue that there’s something I need to act on later when I have free time.

  - It could be useful to integrate this with my preferred system for tracking action items so that I can keep track of things and still hold true to inbox zero.

  - This could also help the “assistant” keep track of, and remind me of, any actions that I need to prioritize.

- Integrating with an instant-messaging client (e.g., Slack)

  - For some emails, it can be helpful to immediately resolve any outstanding questions or actions directly through a synchronous messaging channel (e.g., Slack).

- Adding calendar triage + workflows

  - A non-trivial share of my email traffic is calendar invites, both sent and received.

  - I often have the most complicated schedule among the people trying to schedule time with me, so it would be helpful to have an assistant that could book time for everyone and that I could prompt about priorities (so it could help move things around).

- Improving the functionality with evals + monitoring

  - I plan on releasing a follow-up to this post showing how to do this.

  - Getting a workflow to work right some of the time is easy. Getting it to work reliably enough for daily use is quite hard.

  - Integrating with a third-party tool (like the Arthur Engine and Platform) will help ensure that my prompts get me the expected output, and that the system uses the right tools in the right way to generate it.
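As a starting point before any dedicated tooling, a check like the one below could run over logged tool calls and flag the ones that ignore the system prompt's rules. This is only a sketch: the log format, field names, and the `search_emails` tool name are hypothetical, and it does not use the Arthur Engine or any other evals library.

```python
def check_tool_call(call: dict, min_page_size: int = 50) -> list[str]:
    """Return the rule violations for one logged email-search tool call."""
    problems = []
    args = call.get("arguments", {})
    if "in:inbox" not in args.get("query", ""):
        problems.append("query is not restricted to the inbox")
    if args.get("max_results", 0) < min_page_size:
        problems.append("page size is smaller than requested")
    return problems


def pass_rate(calls: list[dict]) -> float:
    """Fraction of logged calls that satisfy every rule."""
    if not calls:
        return 0.0
    passed = sum(1 for call in calls if not check_tool_call(call))
    return passed / len(calls)


# Made-up log entries: the first follows both rules, the second breaks both.
logged_calls = [
    {"name": "search_emails",
     "arguments": {"query": "in:inbox is:unread", "max_results": 50}},
    {"name": "search_emails",
     "arguments": {"query": "is:unread", "max_results": 10}},
]
for call in logged_calls:
    print(call["arguments"], check_tool_call(call))
print(f"pass rate: {pass_rate(logged_calls):.0%}")
```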

Overall I found this a valuable exercise that taught me how to set up and integrate MCP servers into an LLM workflow, and I hope you find it valuable too!

Props to those who made it this far

Thanks for reading our post. Since you made it this far, take a moment to:

- Subscribe to the waitlist for the Arthur Platform

- Check out our GitHub page for our open-source evals engine