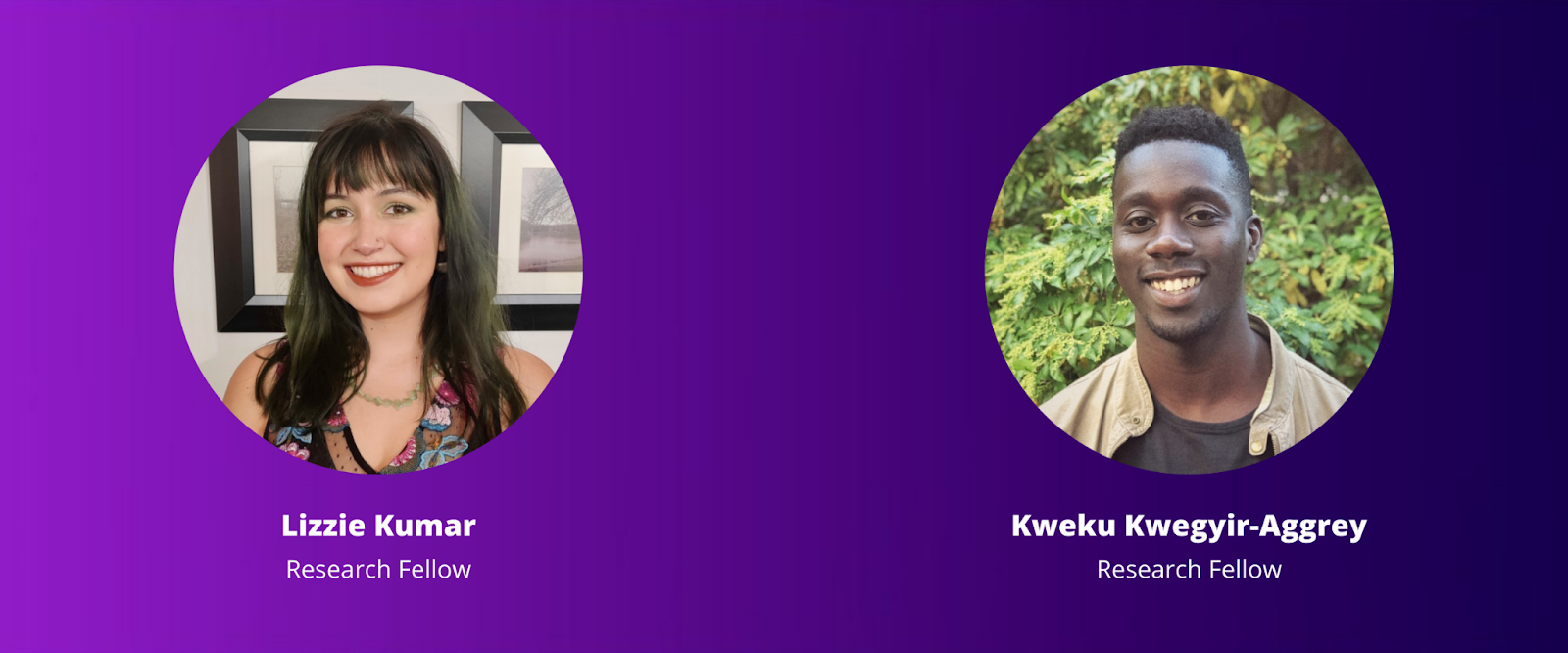

At Arthur, we are thrilled to be working with exceptional rising-star researchers who are tackling the hardest problems in ML Explainability and Fairness. For 2021, we are honored to announce our two ML Research Fellows: Lizzie Kumar and Kweku Kwegyir-Aggrey. We sat down with Lizzie and Kweku to learn a little more about their research interests and get a teaser of what they’ll be working on this summer.

Lizzie Kumar

Can you tell us a little bit about yourself?

Lizzie: I am a second-year Ph.D. student at the University of Utah, working with Suresh Venkatasubramanian on explainability and fairness. I come from a background in data science and math, so I have some firsthand experience in what it's like to try to build and deploy a machine learning model and in the practical issues that come up when you try to do that and the ethical issues at hand. So that's kind of what brought me to this research area.

Can you describe a little bit about what you're researching in particular?

Lizzie: My work so far has been on explainability and the limitations of existing methods for explaining machine learning models like LIME or SHAP. I have been sort of interrogating the mathematical limitations of certain methods that people are using to try to summarize and explain and extract information from models to try to describe the relationship between the inputs and the outputs. And I'm also interested in the limitations of the more general framework of asking a question like “explain the importance of different features in a model”. And so I guess my most recent work has been to pick apart that premise and examine which epistemological values are encoded in asking the question that way. More recently, I've also been collaborating on some forthcoming Law Review-type articles about how AI might be regulated in the future and how court cases might play out. I can't say too much about that right now but it's something I'm very much excited to be working on.

When thinking about explainability there seems to be a spectrum of people who think, on the one hand, that explainability is never really useful and on the far side, you have people who would say I don't really care what you're doing; explainability should be a part of it regardless. Where do you fall on that spectrum, and why?

Lizzie: I definitely don't think that ignoring the concept of explainability and simply relying on metrics such as accuracy is a good thing. But I also don't think that a model being “unexplainable” is inherently a bad thing, because it sort of depends on what you call explainable. I mean, something can be understandable on one level and also hard to describe on another level, or hard to explain when you have a question about something specific like fairness. So, I guess I don't want to place myself anywhere exactly on that spectrum because I think it depends so much on what you mean by explainability, and yeah, it's a fraught question.

Tell us a little bit about your recent work with Leif Hancox-Li which won best paper at FAccT 2021!

Lizzie: Thank you! Yes, so that paper was called “Epistemic values in feature importance methods: Lessons from feminist epistemology” and I guess that paper sort of grew out of me learning about what alternative epistemologies are, and what feminist scholars think about the notion of objectivity in science. I sort of connected that to some observations that I had been making in the explainable machine learning world, where I noticed that a lot of researchers were approaching the idea of explainability from a very sort of… I don't want to say “objective way” but sort of trying to find the one correct explainability metric to perfectly describe their machine learning model. And I guess my perspective is more aligned with the idea that you should be looking at many different metrics, many different ways to describe what a model is doing, and also not just explaining the inner workings of an algorithm but maybe explaining the process through which it was trained. So like what do you know about the people who were training the model and how they collected data and what objectives they had? I think that explaining those kinds of things is just as important as the algorithmic part of a model. Explainability methods tend to be framed in a narrow mathematical way when in fact, explainability could be about so much more.

Do you think that there's a lot that data scientists and machine learning engineers can learn from feminist theory?

Lizzie: Oh, that's a great question. I mean, there are so many levels from which you could dive into that. I guess Leif’s and my paper was drawing on some sort of basic observations from feminist theory about how knowledge is situated and how different people view the world in different ways. It discusses how we all have access to parts of the world that other people don't, and you have to sort of use that to your advantage. In science, you know, certain people can bring perspectives to the table that the dominant “masculine”, supposedly “objective” scientific viewpoint might not have. There are certainly diversity implications for this, in terms of wanting to have people with alternative viewpoints on your team -- that’s a basic takeaway. I think that the diversity message is a really important one, because when you do science or when you do machine learning, or you’re doing math, it's actually people that are doing those things. At the end of the day, it's not like some science machine is making science go forward. It's individuals doing it, and we come with our own experiences and perspectives. So, acknowledging that is something that I think people in many fields would benefit from, not just data science or machine learning.

Tell us about why you joined Arthur and what excites you about this opportunity.

Lizzie: I'm really excited about Arthur's mission to really provide insight into models from different perspectives. I think that many data scientists would benefit from having tools to inspect their models on a deeper level. It's something that is done in such an ad hoc way in my experience as a data scientist; everyone sort of has a different idea of what metrics you want to look at, what a model “performing well” means, and the degree to which you want to be able to interpret the model. Everyone has different viewpoints on that kind of thing, and I guess what I like about the idea behind Arthur's products is that everyone can have access to a kind of baseline set of information. Increasing transparency through access to information is always good.

Kweku Kwegyir-Aggrey

Tell us a bit about yourself: where are you now, what are you studying, what are you up to these days?

Kweku: Yeah, I'm really happy to be joining the Arthur team. I did my undergrad at the University of Maryland where I studied computer science. When I finished, I started my PhD at Brown University where I'm working on some stuff between machine learning and cryptography.

I'm really motivated by problems in fairness, but maybe from a different perspective than the fairness community is broadly concerned with. I think one of the things that I'm really interested in and motivated by is how do we audit algorithms for fairness. How do we check the properties or behaviors of a classifier rather than trying to prove that the classifier is fair?

You’ve done some recent work on the topic of fairness but with applications of Optimal Transport. Why was that of interest to you?

Kweku: I actually think Optimal Transport is one of the most exciting things happening in Fairness right now. The Wasserstein distance had its glory days with the WGAN paper, but I think one of the really interesting things about Optimal Transport broadly is that it enables us to engage with these more statistical fairness properties in a better way than we were able to before. A lot of fairness definitions are given in terms of probabilities, but those probabilities aren't really the kind of linear or differentiable constraints we're used to plugging into a lot of our traditional machine learning setups. Luckily, the Wasserstein distance gives us the opportunity to make some of those quantities interface really nicely with classification, and so we are able to compare classification outcomes in a really rich probabilistic way. This is kind of perfect for fairness because we can suddenly operate on all these comparisons: true positive rates, false positive rates, and so on.
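
To make the idea of comparing classification outcomes across groups a little more concrete, here is a minimal sketch of the empirical 1-Wasserstein distance between the score distributions a classifier assigns to two groups. It is an illustration only, not Kweku's method or anything from Arthur's product: the function name `wasserstein_1d`, the group names, and the synthetic Beta-distributed scores are all assumptions made up for this example.

```python
import numpy as np


def wasserstein_1d(scores_a, scores_b):
    """Empirical 1-Wasserstein distance between two 1-D samples.

    In one dimension this equals the area between the two empirical CDFs.
    """
    a = np.sort(np.asarray(scores_a, dtype=float))
    b = np.sort(np.asarray(scores_b, dtype=float))

    # Every point where either empirical CDF can jump.
    grid = np.sort(np.concatenate([a, b]))
    widths = np.diff(grid)

    # Value of each empirical CDF on the interval starting at each grid point.
    cdf_a = np.searchsorted(a, grid[:-1], side="right") / a.size
    cdf_b = np.searchsorted(b, grid[:-1], side="right") / b.size

    return float(np.sum(np.abs(cdf_a - cdf_b) * widths))


# Hypothetical classifier scores for two demographic groups (synthetic data).
rng = np.random.default_rng(0)
group_a_scores = rng.beta(2, 5, size=1000)
group_b_scores = rng.beta(3, 4, size=1000)

gap = wasserstein_1d(group_a_scores, group_b_scores)
print(f"Wasserstein gap between group score distributions: {gap:.3f}")
```

A gap of zero means the two groups receive identically distributed scores; larger values mean the distributions sit further apart. Unlike a single thresholded rate, this compares the whole distribution of outcomes at once.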

What do you think are the most important, big-picture questions being tackled in ML Fairness?

Kweku: I was talking to my sister about this the other day, about how if you apply for a credit card, sometimes they're able to automate the acceptance or rejection decision, and I can imagine that most of the companies that are doing that have some kind of model in the background automating this decision. All of a sudden, these decisions seem super innocuous, where you apply and they let you know so quickly. Because of that speed, it seems like these decisions are low stakes, but they're not low stakes. They're really, really important, and they're kind of being trivialized in a way because of how often and easily they're being automated. So I think the big deal is that companies are really, really hungry for data to optimize their processes, and machine learning is the way that they turn that data into useful insight. And now we kind of have this wild, wild west of decisions that are being made in a way that we really don't understand. It's important to be able to regulate how those decisions are being made so that they don't really disrupt a lot of the things that we're trying to fix with our societies today.

Tell us a little bit about what excited you about Arthur and this research opportunity.

Kweku: Yeah, I'm just really excited about a company actually getting their hands dirty with fairness problems in machine learning. I was just telling my buddy the other day that I've been kind of hungry to work on a project where I actually get to build something and make something. I think it's important to understand and study theory because it really shapes the language that we use to talk about these problems. But this problem domain is very specifically motivated by real-world applications, so I'm really excited to actually see how a lot of these kinds of theories and ideas translate to practice. I think that's really exciting.

The analogy I had in the conversation with my buddy was that theory is kind of like making hammers. You know, you need hammers to do things, but if you've made enough hammers, build the house already, you know?
