The Open Data Science Conference (ODSC) is one of the largest gatherings of professional data scientists, and its attendees, presenters, and companies are shaping the present and future of the field. The conference aims to bring together the global data science community to encourage the exchange of innovative ideas and the growth of open source software.

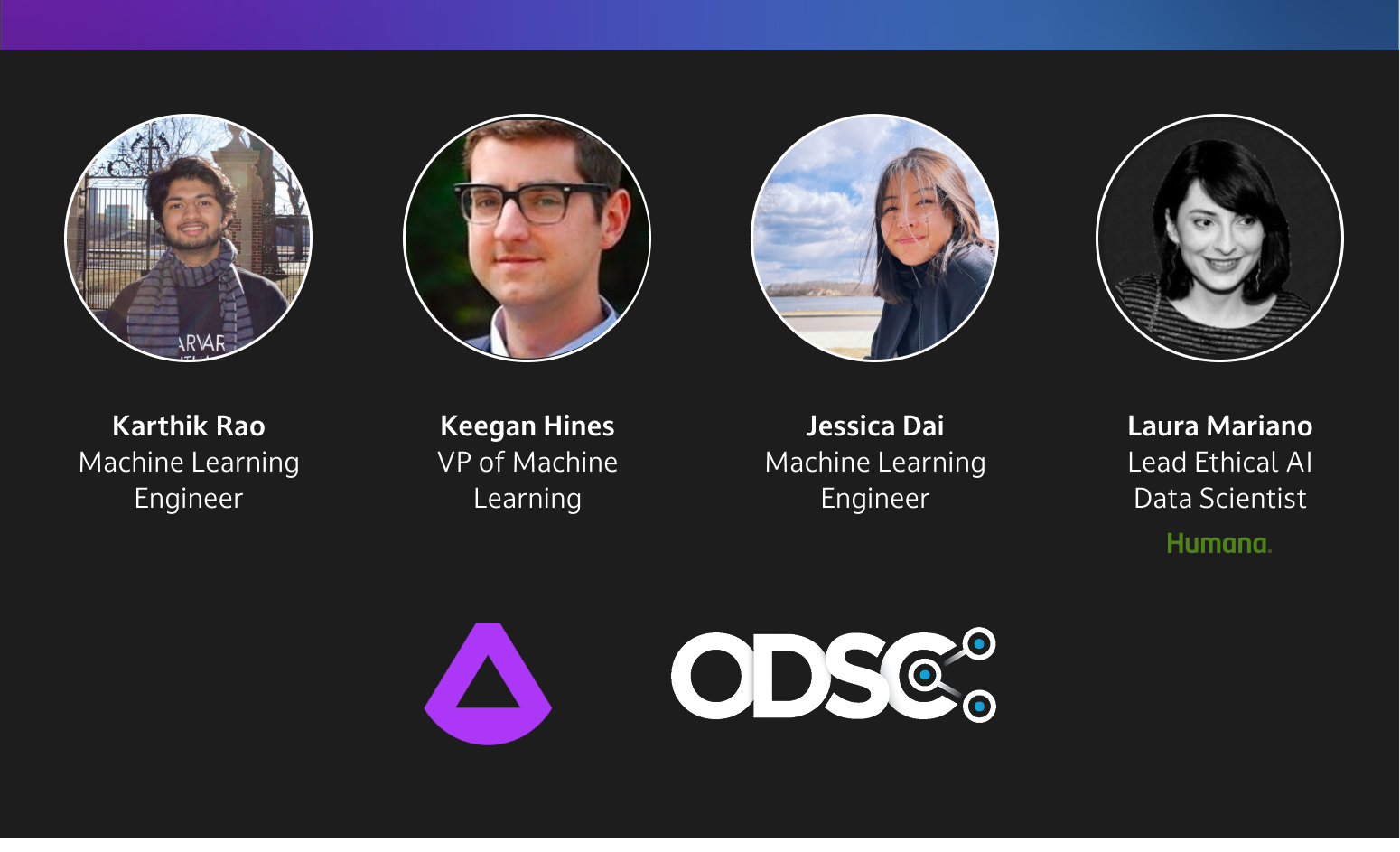

Last week, three members of Arthur’s ML Engineering team headed to ODSC East in Boston as both attendees and speakers. Keep reading for recaps of their favorite talks, as well as the talks they presented based on their own research.

Unsolved ML Safety Problems

Dan Hendrycks, PhD Candidate at UC Berkeley

Though most business-critical machine learning systems today use relatively simple models, the research community has produced astounding results with large models such as GPT-3 and DALL-E, which often have surprising capabilities. In this talk, Dan Hendrycks, a PhD student at UC Berkeley, discussed high-level approaches to the problem of ML safety. Hendrycks described three buckets for future research: Robustness, or the ability of ML systems to perform well even in unexpected or adversarial settings; Monitoring, or the ability to understand and anticipate new challenges; and Alignment, or the ability to align the behavior of ML systems with human goals, values, and intentions. While the highest-stakes motivations for the talk and the largest open questions mostly concern large deep learning models, these challenges and goals remain relevant for simpler models.

Operationalizing Fair ML: From Industry to Research and Back

Jessica Dai, Machine Learning Engineer at Arthur (co-presented with Laura Mariano, Lead Ethical AI Data Scientist at Humana)

In both research and industry, discussion of “fair machine learning” has exploded in the past few years, yet there is often a gap between what academia offers and the constraints and needs of a real-world organization. This talk was co-presented by Jessica Dai of Arthur and Laura Mariano of Humana, which has been an incredible partner of Arthur’s for years. The two discussed Humana’s journey toward informed, responsible use of machine learning to improve health outcomes. First, Humana implemented organizational and process-based tools for governance. Having set the stage for actively improving models, however, Humana’s data scientists realized that none of the popular, published approaches to achieving fairness fit their goals: the way Humana deployed and used machine learning violated assumptions made by many available “fair ML” methods. In the second half of the talk, Jessica showed how these constraints motivated novel research questions and guided the development of an academic research project; explained and demonstrated the method we came up with; and discussed the considerations involved in folding this research back into the product so that it was ultimately usable in a real-world production setting.

Utilizing NLP in the Context of COMP360 Psilocybin Therapy for Treatment-Resistant Depression

Gregory Ryslik, Executive Vice President, AI, Engineering, Digital Health Research & Technology at Compass Pathways

In this talk, Gregory Ryslik of Compass Pathways discussed how NLP techniques have been used in setting up clinical trials of psilocybin as an intervention against treatment-resistant depression. The magnitude of the problem is, of course, enormous; the talk focused on how this specific application setting and the constraints it brought (such as working with nontechnical clinicians, privacy and regulatory concerns, and following the clinical trial process) shaped the development of NLP approaches on the technical side.

Drift Detection in Structured and Unstructured Data

Keegan Hines, Vice President of Machine Learning at Arthur

Machine learning systems in production are subject to performance degradation from many external factors, so it is vital to actively monitor system stability and integrity. A common source of model degradation is the inherent non-stationarity of the real-world environment, commonly referred to as data drift. In this presentation, Keegan described how to reliably quantify data drift across several data paradigms, including tabular data, computer vision data, and NLP data. Attendees came away with a conceptual toolkit for thinking about data stability monitoring in their own models, with example use cases in common settings as well as in more challenging regimes.
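To make "quantifying drift" concrete for the simplest case, a single tabular feature, here is a minimal sketch of the Population Stability Index (PSI), one widely used drift score. It is not necessarily the metric Keegan presented, and it does not cover the image and text cases from the talk. The function name `population_stability_index` and the choice of quantile bins from the reference data are illustrative assumptions.

```python
import numpy as np

def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a current sample of one feature.

    Illustrative sketch: bin edges are quantiles of the reference data, so
    each bin holds roughly the same share of reference points.
    """
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)

    # Interior edges from reference quantiles; the outermost bins are open-ended.
    edges = np.quantile(reference, np.linspace(0, 1, n_bins + 1)[1:-1])
    ref_counts = np.bincount(np.searchsorted(edges, reference, side="right"), minlength=n_bins)
    cur_counts = np.bincount(np.searchsorted(edges, current, side="right"), minlength=n_bins)

    # Convert counts to proportions; eps keeps log() finite for empty bins.
    ref_pct = ref_counts / ref_counts.sum() + eps
    cur_pct = cur_counts / cur_counts.sum() + eps

    # Each term (c - r) * log(c / r) is non-negative, so PSI >= 0.
    return float(np.sum((cur_pct - ref_pct) * np.log(cur_pct / ref_pct)))


rng = np.random.default_rng(0)
baseline = rng.normal(0.0, 1.0, 10_000)
same = rng.normal(0.0, 1.0, 10_000)      # no drift
shifted = rng.normal(0.5, 1.0, 10_000)   # mean shifted by half a standard deviation
print(population_stability_index(baseline, same))     # close to 0
print(population_stability_index(baseline, shifted))  # clearly larger
```

A common rule of thumb treats PSI below about 0.1 as stable and above about 0.25 as significant drift, though thresholds are a judgment call for each model and feature.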

Simplifying MLOps by Taking Storage Out of the Equation

Miroslav Klivansky, Principal Data Architect, AI & Analytics at Pure Storage

One of the biggest issues enterprise companies face when designing and scaling machine learning pipelines is managing the raw storage of the data. In this talk, Miroslav Klivansky discussed his work at Pure Storage, a flash storage company based in Palo Alto. He discussed how to approach large-scale data pipelines and why the storage system must handle the many different kinds of workloads generated by different machine learning models. The key takeaway was that data and storage are not the same thing and should be treated as separate concerns. He showcased a workflow used at Pure Storage in which the stages of the data pipeline are abstracted away from the underlying storage system, yielding cleaner and more efficient pipelines.

FastCFE: A Distributed Deep Reinforcement Learning Counterfactual Explainer

Karthik Rao, Machine Learning Engineer at Arthur

Counterfactuals, an active area of research in machine learning explainability, are explanations that provide actionable steps to move a data point from one side of a decision boundary to the other. These explanations are clearly useful in applications ranging from loan decisions to healthcare diagnosis, where stakeholders need advice about actions they can take to achieve a different outcome. Applicants who are denied a loan want to know what steps would get them approved; similarly, patients want to know how they can achieve a better diagnosis.
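The idea of "moving a point across the decision boundary" is easiest to see for a linear score `w @ x + b`, where the smallest L2 change has a closed form: step along the weight vector until the score just changes sign. The sketch below does that and restricts changes to features marked as actionable (for example, income can change but age cannot). It is a deliberately simple illustration, not FastCFE, which per the talk uses reinforcement learning; the function name, the `mutable` mask, the `margin` parameter, and the toy loan model are all hypothetical.

```python
import numpy as np

def linear_counterfactual(x, w, b, mutable=None, margin=1e-3):
    """Smallest L2 change to x that flips the sign of w @ x + b (sketch).

    Only features where `mutable` is True may change. Returns the
    counterfactual point and the per-feature change.
    """
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    mask = np.ones_like(w) if mutable is None else np.asarray(mutable, dtype=float)

    score = w @ x + b
    target = margin if score < 0 else -margin  # land just past the boundary
    direction = w * mask                       # move only along actionable features
    norm_sq = direction @ direction
    if norm_sq == 0:
        raise ValueError("no mutable feature affects the decision")

    # Moving by step * direction changes the score by exactly step * norm_sq.
    step = (target - score) / norm_sq
    x_cf = x + step * direction
    return x_cf, x_cf - x


# Hypothetical loan model over [income_k, debt_k, age]; a positive score means approve.
w = np.array([0.04, -0.1, 0.02])
b = -1.0
applicant = np.array([50.0, 20.0, 30.0])   # score is negative: denied
x_cf, delta = linear_counterfactual(applicant, w, b, mutable=[True, True, False])
print(delta)  # raise income, lower debt, leave age untouched
```

For nonlinear models and richer constraints no such closed form exists, which is where search- or learning-based approaches like the one presented come in.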

In this presentation, Karthik Rao showcased FastCFE, an algorithm and feature that uses reinforcement learning to provide real-time counterfactual explanations. The presentation was broken down as follows:

- Overview of Counterfactuals and Reinforcement Learning (RL)

- Distributed deep reinforcement learning using OpenAI Gym and Ray + RLlib

- Benchmarks and Results

A Unified View of Trustworthy AI with the 360 Toolkits

Dr. Kush Varshney, Distinguished Research Staff Member and Manager at IBM Research

The final talk of the conference was given by Dr. Kush Varshney of IBM Research, who discussed the open source initiatives IBM has undertaken to develop trustworthy AI toolkits. This was a particularly enlightening talk, and it benefits the AI community that large players such as IBM are committed to trustworthy AI and are willing to open source these technologies to help companies build trusted AI systems. If your organization has data scientists who can afford to invest the time and want to be truly hands-on, these IBM toolkits are likely to work well for your team. Dr. Varshney walked through three specific toolkits:

- AI Fairness 360: Methods to detect bias and techniques to mitigate it

- AI Explainability 360: Out-of-the-box tools for explaining models

- Adversarial Robustness 360 Toolbox: How to detect and defend against adversarial attacks on models
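As a small example of what "detecting bias" can mean, the sketch below computes disparate impact, the ratio of favorable-outcome rates between an unprivileged and a privileged group. AI Fairness 360 includes metrics of this kind, but this is a hand-rolled NumPy version rather than the toolkit's API; the function name and the toy data are illustrative assumptions.

```python
import numpy as np

def disparate_impact(y_pred, unprivileged):
    """Favorable-outcome rate of the unprivileged group divided by that of
    the privileged group (illustrative sketch, not the AIF360 API).

    y_pred: 1 for a favorable prediction (e.g. loan approved), else 0.
    unprivileged: True for members of the unprivileged group.
    """
    y_pred = np.asarray(y_pred, dtype=float)
    unprivileged = np.asarray(unprivileged, dtype=bool)
    rate_unpriv = y_pred[unprivileged].mean()
    rate_priv = y_pred[~unprivileged].mean()
    if rate_priv == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return rate_unpriv / rate_priv


# Toy predictions: first four rows belong to the unprivileged group.
y = [1, 0, 0, 1, 1, 1, 1, 0]
group = [True, True, True, True, False, False, False, False]
print(disparate_impact(y, group))  # 0.5 / 0.75
```

In the toy example the ratio is 0.5 / 0.75, about 0.67, which falls below the 0.8 threshold of the commonly cited four-fifths rule; a value of 1.0 means both groups receive favorable outcomes at the same rate.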

ODSC East was an incredibly rewarding experience for the Arthur team, and we’re thrilled that our engineers were able to share the results of the hard work they’ve been doing to advance the field of machine learning. We’re grateful to have finally attended a conference in person after so long, and especially to have co-presented with one of our long-time partners, Humana, to amplify the story of their leading work. See you at ODSC 2023!
